Description

ATDD stands for “Acceptance Test-Driven Development”. ATDD is rooted in the TDD practice. The idea appears to have emerged with Lisa Crispin [Crispin 2000], who already suggested automating Acceptance Tests (ATs) written before the code [Kaner 2002].

ATDD consists of [Axelrod 2018]:

- defining the ATs for each new User Story (US)

- implementing the ATs with production code

- writing the minimal production code needed to pass the ATs and meet the Definition of Done (DoD)

- when all ATs pass, the US passes; it is “Done” once the DoD is also met [Schwaber 2020]
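The cycle above can be sketched as follows. The User Story (“orders of 100 or more get a 10% discount”), the function names and the threshold are hypothetical, chosen only for illustration; the tests use the plain-`assert` style that pytest collects, which is an assumption about the runner. The ATs come first in the file to mirror the test-first order, and `order_total()` is the minimal production code added afterwards to make them pass.

```python
# Hypothetical US (illustrative assumption, not from the sources):
#   As a shopper, I need a 10% discount on orders of 100 or more,
#   so that I am rewarded for large purchases.


# Step 1: the ATs are written first, straight from the US.
# (pytest collects plain functions named test_* that use bare asserts.)
def test_discount_applies_from_threshold():
    assert order_total(100) == 90.0


def test_no_discount_below_threshold():
    assert order_total(99.99) == 99.99


# Step 2: the minimal production code needed to make the ATs pass.
def order_total(amount: float) -> float:
    """Return the amount due, applying a 10% discount from 100 upwards."""
    if amount >= 100:
        return round(amount * 0.9, 2)
    return amount
```

Running `pytest` on this file before `order_total()` exists makes both ATs fail; adding the function turns them green, and nothing beyond what the ATs demand is implemented.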

ATDD is not limited to GUIs: it applies to any interface the US refers to, such as REST APIs, RPC or other mechanisms, which addresses the testability of the Product Backlog.

ATs can [Axelrod 2018]:

- help the organization focus on the Customer, since they improve communication between the Business Owner and the Development Team

- help the Development Team understand progress and what to test

- help both customers and Development Teams make sure that the project is progressing in the right direction [Crispin 2000] [Deng 2009]

- force teams to plan testable production code from the start

- ensure that Testers and Scripters are involved as early as possible, allowing them to genuinely influence the quality of the product

- serve as regression tests that run automatically on a regular basis, so that the code can be safely refactored

- improve the accuracy of development effort estimates when ATs are written beforehand (for example, during a 3 Amigos or an Example Mapping session at Sprint Refinement time)

- serve as documentation the “Agile way” by providing “executable specifications” [Ambler 2005]

- be run as soon as the simplest line of code meant to implement the AT is written, instead of waiting for a release candidate of the whole solution, as per the “Shift Left” testing principle

- be run much more frequently when automated, rather than only at the end of the SDLC, thus reducing non-compliance risks early

ATs written with ATDD are executable specifications, which makes ATDD like a “Möbius strip”: the specification side and the test side of an AT are actually the same surface, and automating the test script is what makes the specification runnable [Moustier 2020].

Why do we need test automation?

- automation reduces the so-called transaction costs [SAFe 2021-06]: in an iterative delivery model, every batch to be deployed bears some costs, which should be kept low to enable frequent releases

- low transaction costs also reduce the holding cost [SAFe 2021-06], since smaller batches can be released sooner: delaying a release means lost profit from Customers, and Competitors may take advantage of the value not yet delivered

- automation provides fast, fully repeatable and precise tests [Axelrod 2018]

- it helps run regression tests without requiring any knowledge of the product

- Agile, and DevOps above all, rely on a heavy automation strategy
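SAFe's Principle #6 presents the optimal batch size as a U-curve trade-off between transaction and holding costs. The sketch below illustrates why cheaper, automated releases favor smaller batches. Its cost model is a simplifying assumption, not SAFe's own formula: each release costs a fixed `transaction_cost`, and holding cost grows linearly with batch size because value waits longer in bigger batches. The function names and numbers are made up for illustration.

```python
def total_cost(batch_size: int, transaction_cost: float,
               holding_cost: float, items: int) -> float:
    """Illustrative U-curve: transaction + holding costs for `items` work items.

    Assumed model: every release costs `transaction_cost`, and holding cost
    grows linearly with batch size (value waits longer in bigger batches).
    """
    releases = items / batch_size
    return transaction_cost * releases + holding_cost * batch_size


def optimal_batch_size(transaction_cost: float, holding_cost: float,
                       items: int) -> int:
    """Brute-force the batch size with the lowest total cost."""
    return min(range(1, items + 1),
               key=lambda b: total_cost(b, transaction_cost, holding_cost, items))


# Manual release process vs. automated pipeline (made-up numbers).
print(optimal_batch_size(transaction_cost=50.0, holding_cost=2.0, items=100))  # → 50
print(optimal_batch_size(transaction_cost=2.0, holding_cost=2.0, items=100))   # → 10
```

Under these assumptions, cutting the transaction cost moves the bottom of the U-curve from batches of 50 items to batches of 10, i.e. five times more releases for the same amount of work.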

“Test automation” of course has some drawbacks, such as:

- script maintenance to keep up with changes to interfaces such as the UI or APIs

- test data must be maintained/migrated to match business changes and their implementation

- false positives (aka “flaky tests”) must be tracked down in a Jidoka approach

- scripting is not testing, and relying only on scripted test cases is not enough [Bach 2014]

- if test automation is done in silo mode, bottlenecks will arise

- automated tests must start from a well-known initial state, because a failure in a scenario aborts that test and moves on to the next one; therefore, the failure of one test should not affect the following ones, and dependencies between automated tests are strongly discouraged [Axelrod 2018].
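The last point can be sketched with Python's standard `unittest` module, whose `setUp()` hook runs before every test. The `Cart` class is a hypothetical system under test invented for this example. Because each test rebuilds its own well-known state instead of relying on what a previous test left behind, the tests can run in any order and a failure in one cannot cascade into the others.

```python
import unittest


class Cart:
    """Hypothetical system under test: a shopping cart."""

    def __init__(self) -> None:
        self.items: list[str] = []

    def add(self, item: str) -> None:
        self.items.append(item)


class CartAcceptanceTests(unittest.TestCase):
    def setUp(self) -> None:
        # Every test starts from the same well-known state: an empty cart.
        # Nothing is shared between tests, so one failure cannot cascade.
        self.cart = Cart()

    def test_add_one_item(self) -> None:
        # Verifies a single behavior.
        self.cart.add("book")
        self.assertEqual(self.cart.items, ["book"])

    def test_new_cart_is_empty(self) -> None:
        # Passes in any execution order, because setUp() rebuilt the cart.
        self.assertEqual(self.cart.items, [])
```

Saved as a module, it runs with `python -m unittest`; a matching `tearDown()` method is the usual place to release external resources such as files or connections.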

ATDD is often confused with Behavior-Driven Development (BDD). BDD is a methodology which emphasizes bridging the gap between the description of a behavior and its implementation. The behavior is described in natural language and replaces formal specifications. It comes along with tests that verify the feature [North 2006] [Axelrod 2018] [Moustier 2019-1].

While BDD focuses on behaviors from the Customer's point of view, ATDD aims to test acceptance criteria (AC). On the one hand, BDD describes the business (outside-in) and provides criteria through so-called “Specification by Example” (SBE) [Fowler 2004]; on the other hand, ATDD handles the technical side of the AC and bridges the outside-in view with the inside-out view of TDD [Moe 2019].

Along with BDD, which was created by Dan North [North 2006], came a tool named “Cucumber” [Wynne 2012] [Ye 2013], which turns a behavior specification written in formatted natural language into code stubs ready to receive the implementation of the test. BDD thus enables ATDD [Axelrod 2018].

The “natural language” used by Cucumber is called “Gherkin”. Here is its structure:

Feature: <US name>

As a <User Type>

I need to <do something>

So that <benefit of the action>

Scenario X : <scenario test name X>

Given <context X>

When <something happens>

Then <items to check with AND, THEN or BUT between items>

The number of scenarios may differ from one US to another; providing too many for the same US is not good practice, since it indicates a US too big to be handled within a Sprint.

From the Gherkin description, most BDD frameworks generate the skeleton of the unit test code. The generated skeleton then needs to be filled with instructions and calls that [Axelrod 2018]:

- set up the initial condition: the “Given” part

- bring the product to the expected situation: the “When” part

- perform the defined checks: the “Then” part

These tools are actually based on keywords that receive the implementation, just like the Keyword-Driven Testing (KDT) approach, where each Gherkin step is treated as a keyword [Deng 2009].
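The keyword mechanism can be illustrated with a toy runner. This is not Cucumber's API, nor that of any Python BDD library: the `step` decorator, `run_scenario()` and the cart steps are hypothetical names made up for this sketch. Each step definition registers a regular expression (the keyword) together with the function that implements it; the runner then dispatches every `Given`/`When`/`Then`/`And`/`But` line of a scenario to the matching implementation.

```python
import re
from typing import Callable

# Registry mapping a step pattern (the "keyword") to its implementation.
STEPS: list[tuple[re.Pattern[str], Callable[..., None]]] = []


def step(pattern: str):
    """Decorator registering a step implementation for a regex pattern."""
    def register(func: Callable[..., None]) -> Callable[..., None]:
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"a cart containing (\d+) items?")
def given_cart(ctx: dict, count: str) -> None:
    ctx["cart"] = ["item"] * int(count)  # initial condition


@step(r"the user adds (\d+) items?")
def when_add(ctx: dict, count: str) -> None:
    ctx["cart"].extend(["item"] * int(count))  # action on the product


@step(r"the cart contains (\d+) items?")
def then_count(ctx: dict, count: str) -> None:
    assert len(ctx["cart"]) == int(count), ctx["cart"]  # expected check


def run_scenario(text: str) -> None:
    """Dispatch each Given/When/Then/And/But line to its registered step."""
    ctx: dict = {}
    for line in text.strip().splitlines():
        keyword, _, body = line.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And", "But"}:
            continue  # skip Feature/Scenario headers, descriptions, blank lines
        for pattern, func in STEPS:
            match = pattern.fullmatch(body)
            if match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"No step definition for: {line.strip()}")
```

For example, `run_scenario("Given a cart containing 1 item\nWhen the user adds 2 items\nThen the cart contains 3 items")` passes silently, while an unmatched line raises `LookupError`, which plays the role of the “undefined step” that real frameworks report.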

Other BDD tools allow you to incorporate the documentation into the test code itself, for example:

- RSpec for Ruby

- Spectrum for Java

- MSpec for .NET

- Jasmine and Mocha for JavaScript

Impact on the testing maturity

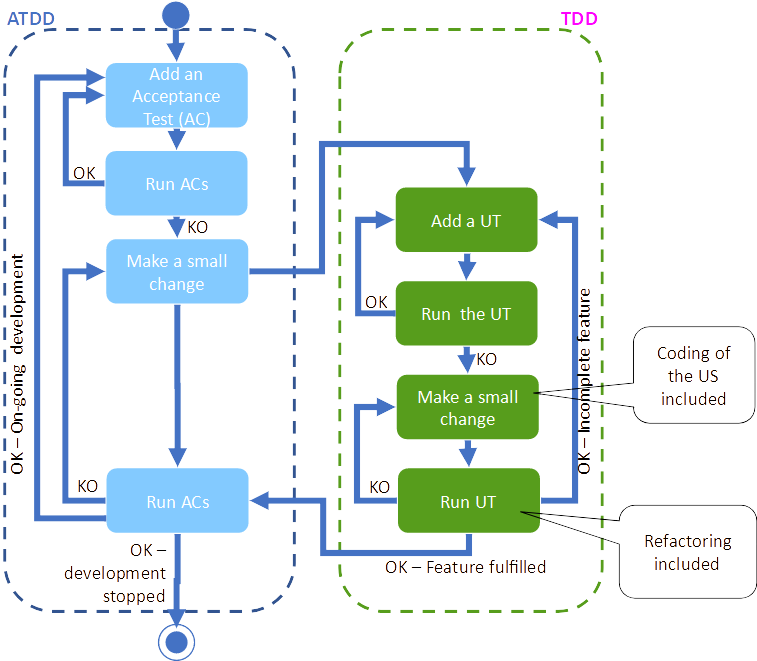

ATDD is strongly linked with TDD, not only because of its origin [Crispin 2000] but also because ATDD reinforces TDD by providing the skeleton of unit tests that need to be filled with calls to production code. This creates a double loop learning system [Argyris 1977] [Smith 2001] that helps deliver the right solution rather than mere compliance with unit tests.

ATDD and TDD are a double loop learning system [Ambler 2006]

This double loop learning system should itself be embedded in a higher-level loop such as the Lean Startup [Ries 2012], to ensure that the right product is delivered rather than something merely compliant with the defined US.

Lean Startup as a double loop learning mechanism over the double loop ATDD/TDD [Moustier 2020]

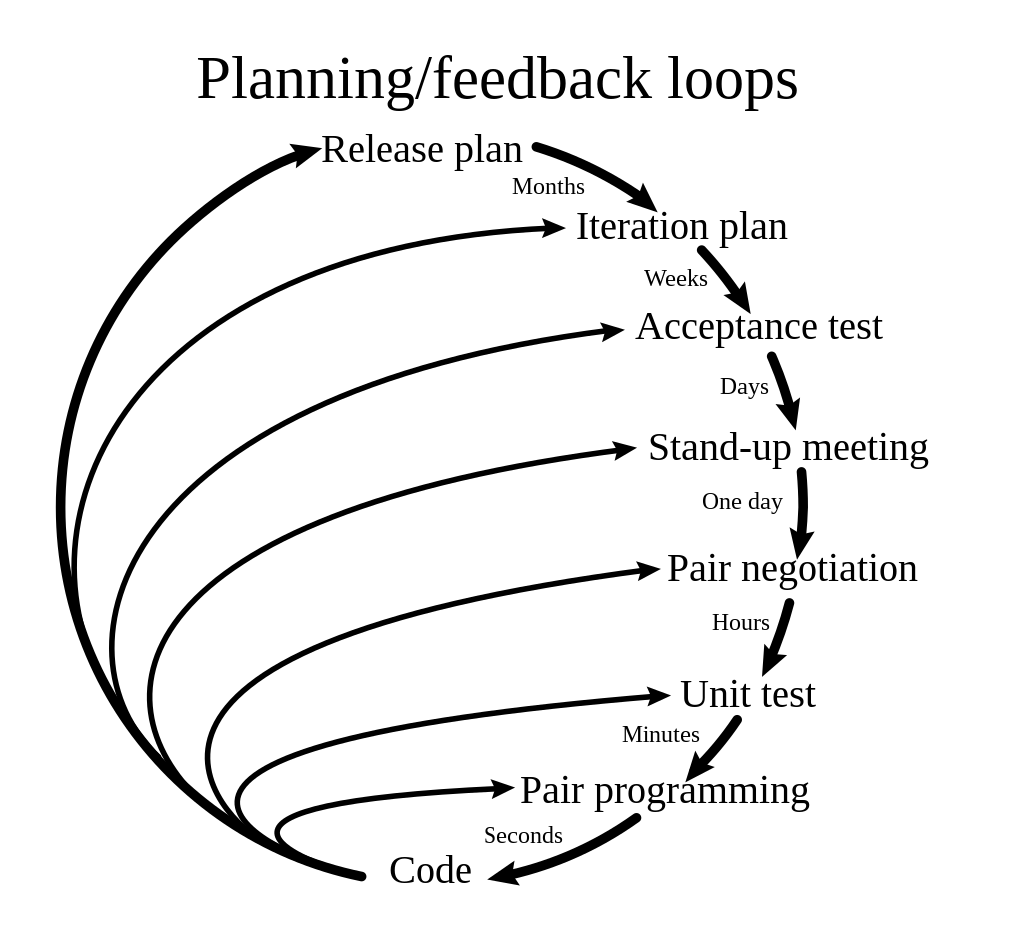

These double loop systems may include intermediate loop levels, as in Extreme Programming [Beck 2004], which provides feedback at many levels and at many frequencies [DonWells 2013].

Extreme Programming requires planning and feedback at many levels and many frequencies [DonWells 2013]

Since ATDD builds on BDD, non-programmers are able to create tests [Kaner 2002] and liaise seamlessly with people working at lower levels. Both Gherkin and SBE aim to provide a means of communication that combines usage and acceptance criteria, bridging ATs and unit tests; moreover, the “Test First” mindset can be extended to the connected double loop systems implemented in the organization. Seen through the ATDD prism, organizations come in different flavors:

- Dedicated team: the Scripting Team has complete responsibility for test automation but is disconnected from the Development Team. This configuration introduces some issues in a DevOps context [Axelrod 2018], which leads to merging Scripters into Development Teams

- Isolated Scripters within Development Teams: they create bottlenecks inside the Team and reinforce Conway’s law [Conway 1968], thus increasing issues when integrating parts

- T-shaped Team Members: to overcome the testing bottleneck, Developers contribute to testing by automating scripts

- Feature Teams: once skills are no longer an issue, the whole Team may handle E2E tests with ATDD; typically, a common goal, a few relevant metrics to monitor success, and shared values help the Team go beyond the Feature Team paradigm [North 2016].

Another topic that test automation brings up is Test Data Management (TDM).

TDM is a major topic in test automation. Test data can be derived from production data through some BI, possibly with the help of AI [Legeard 2021]. For new features, however, production data will probably be missing; since ATDD mostly deals with new features, relying on production data is an antipattern here. Moreover, Data-Driven Testing is easy at the unit test level [Axelrod 2018], but with complex systems and testware it can be really difficult because of the amount of data to handle. To help with this, data may be organized in a dictionary where each test case owns its data, which is injected (and possibly anonymized or obfuscated) into the test environment [Axelrod 2018].
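A per-test-case data dictionary can be sketched as below. The test case IDs, the customer records and the choice of fields to obfuscate are hypothetical. The obfuscation technique shown, replacing personal values with a truncated SHA-256 digest, is only one possible choice: it is pseudonymization rather than true anonymization, since identical inputs always map to the same token.

```python
import hashlib
from copy import deepcopy

# Hypothetical data dictionary: each test case owns its own data set.
TEST_DATA = {
    "TC-001_premium_discount": {
        "customer": {"name": "Alice Martin", "email": "alice@example.com",
                     "tier": "premium"},
    },
    "TC-002_standard_no_discount": {
        "customer": {"name": "Bob Durand", "email": "bob@example.com",
                     "tier": "standard"},
    },
}

PERSONAL_FIELDS = {"name", "email"}  # assumption: the fields to obfuscate


def pseudonymize(value: str) -> str:
    """Replace personal data with a stable, non-reversible token."""
    return "anon-" + hashlib.sha256(value.encode("utf-8")).hexdigest()[:10]


def data_for(test_case_id: str) -> dict:
    """Return an obfuscated, private copy of the data owned by one test case."""
    data = deepcopy(TEST_DATA[test_case_id])  # never hand out the shared original
    for record in data.values():
        for field in record.keys() & PERSONAL_FIELDS:
            record[field] = pseudonymize(record[field])
    return data
```

A test would call `data_for("TC-001_premium_discount")` and inject the result into its environment; business fields such as `tier` stay readable, while names and e-mails never leave the dictionary in clear text.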

At the end of each scenario, data should also be reset so that the test can be repeated. If tests running in parallel would touch the same data, multiple environments must be made available [Axelrod 2018], notably thanks to:

- cloud management along with a Twelve-Factor App approach;

- data storage strategies such as read-only shared data [Axelrod 2018], database replication mechanisms [Wiesmann 2000] or a CQRS design [Fowler 2011];

- dark launching to route data changes to dedicated storage when test changes need to be isolated

TDM is complex and hard to tackle; therefore, it should be addressed incrementally, starting from the very beginning.
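Resetting data and isolating parallel runs can be sketched with Python's standard `sqlite3` module. Here an in-memory SQLite database stands in for a real test environment (an assumption made to keep the example self-contained; in practice it could be a cloud instance or a database replica). Shared reference data is exposed read-only, each test gets its own writable copy, and closing the connection discards every change, so the next run starts from a clean state. `isolated_database()` and the `product` table are hypothetical names.

```python
import sqlite3
from contextlib import contextmanager
from types import MappingProxyType

# Shared reference data, exposed read-only so no test can alter it.
REFERENCE_PRODUCTS = MappingProxyType({"P1": 10.0, "P2": 25.5})


@contextmanager
def isolated_database():
    """Give one test its own in-memory database, dropped when the test ends."""
    conn = sqlite3.connect(":memory:")  # private to this connection
    try:
        conn.execute("CREATE TABLE product (id TEXT PRIMARY KEY, price REAL)")
        conn.executemany("INSERT INTO product VALUES (?, ?)",
                         REFERENCE_PRODUCTS.items())
        yield conn
    finally:
        conn.close()  # closing discards all changes: the next run starts clean


def test_price_update_is_isolated():
    with isolated_database() as db:
        db.execute("UPDATE product SET price = 0 WHERE id = 'P1'")
        assert db.execute("SELECT price FROM product WHERE id = 'P1'").fetchone()[0] == 0
    with isolated_database() as db:  # a fresh environment, e.g. for a parallel run
        assert db.execute("SELECT price FROM product WHERE id = 'P1'").fetchone()[0] == 10.0
```

The same pattern scales up by swapping the in-memory database for one environment per test run, while the reference data stays the single, read-only source every environment is seeded from.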

Agilitest’s standpoint on this practice

Because Agilitest is a #nocode scripting tool, Developers usually don’t trust the technology; as a result, many Agilitest Users are business-oriented people. This situation often leads Managers to set up a silo-oriented organization to quickly achieve test automation in their company. However, that achievement is not the actual goal. As shown above, the mindset introduced by ATDD is essentially a matter of communication; spreading tools that enable ATDD is vital for the delivery flow. Sharing concerns about ATDD tools between Developers and Scripters aims at merging the development and test automation ecocycles. This notably involves:

- connecting Agilitest to an existing Jenkins server

- extracting data from databases to take advantage of the Data-Driven feature in Agilitest

Finally, in automated test scripts, minor failures can block the more important parts of the test from running [Axelrod 2018]; therefore, tests must be short. Some Agilitest Users own scripts with a lot of steps: a script with more than 3,000 steps has been recorded! The good news is that this proves the tool is robust; however, it is better practice to design scripts that each verify only one thing.


To go further

- [Ambler 2005] : Scott Ambler - c2005 - “Agile Core Practice Executable Specifications” - http://agilemodeling.com/essays/executableSpecifications.htm

- [Argyris 1977] : Chris Argyris - « Double Loop Learning in Organizations » - Harvard Business Review - SEP/1977 - https://hbr.org/1977/09/double-loop-learning-in-organizations

- [Axelrod 2018] : Arnon Axelrod - 2018 - “Complete Guide to Test Automation: Techniques, Practices, and Patterns for Building and Maintaining Effective Software Projects” - isbn:9781484238318

- [Bach 2014] : James Bach & Aaron Hodder - APR 2014 - “Test Cases Are Not Testing” - https://www.satisfice.com/download/test-cases-are-not-testing

- [Beck 2004] : Kent Beck & Cynthia Andres - feb 2004 - “Extreme Programming Explained: Embrace Change” - ISBN : 9780321278654

- [Cohn 2004] : Mike Cohn - 2004 - “User Stories Applied: For Agile Software Development” - isbn:9780321205681

- [Conway 1968] : Melvin Conway - « How Do Committees Invent? » - Datamation magazine - 1968 - http://www.melconway.com/Home/Committees_Paper.html

- [Crispin 2000] : Lisa Crispin & Carol Wade - NOV 2000 - “The Need for Speed: Automating Acceptance Testing in an Extreme Programming Environment” - http://www.qualityweek.com/QWE2K/Papers.pdf/Crispin.pdf

- [Deng 2009] : Chengyao Deng - SEP 2007 - “FitClipse: a Testing Tool for Supporting Executable Acceptance Test Driven Development” - https://www.researchgate.net/publication/221592535_FitClipse_A_Tool_for_Executable_Acceptance_Test_Driven_Development

- [Fowler 2004] : Martin Fowler - MAR 2004 - “Specification by Example” - www.martinfowler.com/bliki/SpecificationByExample.html

- [Fowler 2011] : Martin Fowler - JUL 2011 - “CQRS” - https://martinfowler.com/bliki/CQRS.html

- [Jureczko 2010] : Marian Jureczko & Michal Mlynarski - JAN 2010 - “Automated acceptance testing tools for web applications using Test-Driven Development” - https://www.researchgate.net/publication/234137871

- [Kaner 2002] : Cem Kaner & James Bach & Bret Pettichord - 2002 - “Lessons Learned in Software Testing: A Context-Driven Approach” - isbn:9781118080559

- [Legeard 2021] : Bruno Legeard & Julien Botella - OCT 2021 - “API Testing based on AI-assisted Log Analysis” - https://www.youtube.com/watch?v=Pe9TIbMw5KQ

- [Moe 2019] : Myint Myint Moe - MAY 2019 - “Comparative Study of Test-Driven Development TDD, Behavior-Driven Development BDD and Acceptance Test–Driven Development ATDD” - https://www.researchgate.net/publication/334123683_Comparative_Study_of_Test-Driven_Development_TDD_Behavior-Driven_Development_BDD_and_Acceptance_Test-Driven_Development_ATDD

- [Moustier 2019-1] : Christophe Moustier – JUN 2019 – « Le test en mode agile » - ISBN 978-2-409-01943-2

- [Moustier 2020] : Christophe Moustier – OCT 2020 – « Conduite de tests agiles pour SAFe et LeSS » - ISBN : 978-2-409-02727-7

- [North 2006] : Dan North - « Introducing BDD » - Better Software magazine - MAR 2006 - https://dannorth.net/introducing-bdd/

- [North 2016] : Dan North - JAN 2016 - “Beyond Feature Teams” - https://www.youtube.com/watch?v=EzWmqlBENMM

- [Ries 2012] : Eric Ries - 2012 - « Lean start-up » - Pearson - ISBN 978-2744065088 - http://zwinnalodz.eu/wp-content/uploads/2016/02/The-Lean-Startup-.pdf

- [SAFe 2021-06] : SAFe – FEB 2021 - « Principle #6 - Visualize and limit WIP, reduce batch sizes, and manage queue lengths » - https://www.scaledagileframework.com/visualize-and-limit-wip-reduce-batch-sizes-and-manage-queue-lengths/

- [Schwaber 2020] : Ken Schwaber & Jeff Sutherland - « The Scrum Guide: The Definitive Guide to Scrum: The Rules of the Game » - NOV 2020 - https://scrumguides.org/docs/scrumguide/v2020/2020-Scrum-Guide-French.pdf or https://scrumguides.org/docs/scrumguide/v2020/2020-Scrum-Guide-US.pdf

- [Smith 2001] : Mark K. Smith - 2001 (updated in 2005) - “Chris Argyris: theories of action, double-loop learning and organizational learning” - www.infed.org/thinkers/argyris.htm

- [Wiesmann 2000] : Matthias Wiesmann & Fernando Pedoney & André Schiper & Bettina Kemme & Gustavo Alonso - FEB 2000 - “Database Replication Techniques: a Three Parameter Classification” - https://www.researchgate.net/publication/3876756

- [Wynne 2012] : Matt Wynne & Aslak Hellesoy - 2012 - “The Cucumber Book: Behaviour-Driven Development for Testers and Developers” - isbn:9781934356807

- [Ye 2013] : Wayne Ye - 2013 - “Instant Cucumber BDD How-to” - isbn:9781782163480